Why Mainframe To Cloud Migration Challenges Cause 67% of Projects To Fail

Mainframe to cloud migration challenges often stem from a fundamental architectural conflict: mainframes and cloud environments operate on entirely different principles. This conflict creates significant issues with data fidelity, performance latency, and cost unpredictability. These are not abstract risks; they are the primary drivers behind project failure.

Why Many Mainframe Migrations Fail

Vendors often present a simplified narrative of seamless transition and significant cost savings. The operational reality is more complex. A 2023 Forrester report found that 67% of COBOL migration projects fail to meet their stated objectives.

This failure rate isn’t due to a single cause but rather an accumulation of technical, financial, and organizational miscalculations.

A common misstep is treating the project as a simple infrastructure swap. A mainframe is not just a large server suitable for a lift-and-shift approach. It’s a highly integrated ecosystem, optimized over decades for high-volume transaction processing with minimal latency. Moving that workload to the cloud without fundamental re-architecture is a common precursor to failure.

The Core Failure Points

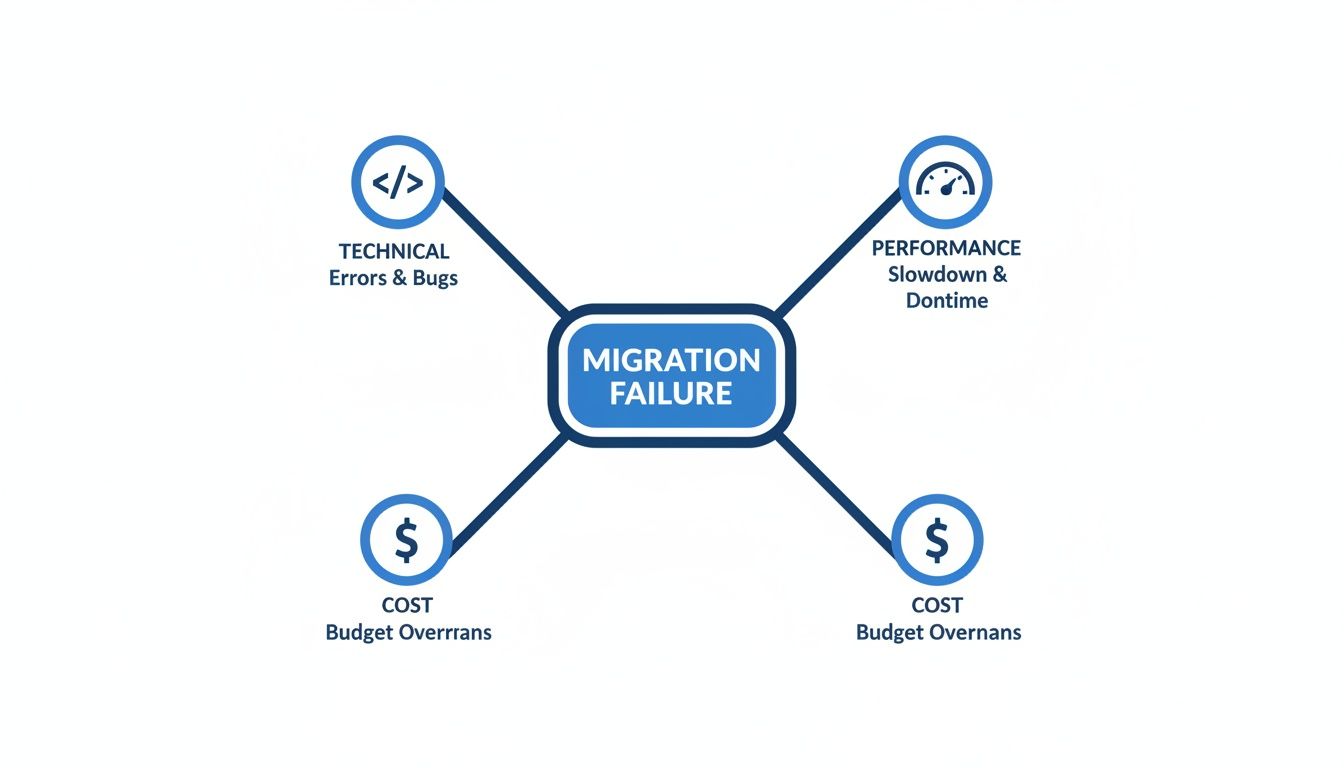

The issues that derail these projects are typically technical in origin but often trigger cascading financial consequences. These problems are rarely visible in high-level project plans but become evident during testing or post-deployment.

- Technical Breakdown: Data corruption is a frequent outcome. A common error is the incorrect handling of COBOL’s fixed-point COMP-3 data types during conversion. This can result in silent, incremental rounding errors in financial calculations, corrupting critical business data for months before detection.

- Performance Collapse: Mainframe applications are designed for sub-millisecond I/O. When these tightly coupled workloads are moved to a distributed cloud environment, network latency becomes a significant performance bottleneck. Batch jobs that previously took an hour might extend to eight hours, missing critical processing windows and disrupting business operations.

- Financial Miscalculation: The business case often underestimates the true cost of refactoring decades of legacy code. The variable nature of cloud consumption pricing introduces another layer of financial risk. A project budgeted for $5M can escalate to $15M when unforeseen complexities arise and cloud service consumption exceeds initial estimates.

These issues are interconnected and can compound one another. Technical debt, for example, contributes directly to both performance degradation and budget overruns.

Based on an analysis of over 200 migration post-mortems, the failure points show a high degree of consistency. The top five are detailed below.

Top 5 Mainframe Migration Failure Points

| Challenge Area | Primary Failure Driver | Typical Impact |
|---|---|---|
| Data Integrity | Incorrect handling of legacy data types (EBCDIC, COMP-3) during conversion. | Silent data corruption in financial or customer records, leading to catastrophic business errors post-launch. |
| Performance | Ignoring the impact of network latency on applications built for low-latency I/O. | Batch jobs miss processing windows; online transaction response times increase from <1s to 5-10s. |
| Testing | Inability to create a production-scale test environment due to data volume and complexity. | “Successful” tests in dev environments fail spectacularly under real-world load, forcing a rollback. |
| Cost Overruns | Underestimating the manual effort required to refactor or rewrite complex business logic. | A 12-month, $5M project turns into a 36-month, $15M+ ordeal with no end in sight. |
| Integration | Failure to map and replicate hundreds of upstream/downstream integrations. | The new cloud system goes live, but critical systems that depend on it (e.g., reporting, billing) break. |

This data suggests that success is contingent on diligent, upfront analysis. A thorough risk assessment can identify these issues before a multi-year, high-stakes program is initiated.

A detailed cloud migration risk assessment framework can help structure this initial work.

Navigating The One-Way Street of Mainframe Migration

A critical aspect of mainframe migration is its near-irreversibility. Unlike many IT projects where rollback is a viable contingency, moving core mainframe systems to the cloud is a fundamentally transformative event.

Once hardware is decommissioned, data formats are altered, and teams are retrained, repatriation becomes technically and financially prohibitive. This puts significant pressure on engineering leaders to ensure the initial decision is sound. A failed migration can result in a high-cost, low-performance state with no simple path back to the stability of the mainframe environment.

Why Repatriation Is So Rare

Even when cloud projects encounter significant issues, few organizations move applications back to the mainframe. The 2025 Arcati Mainframe Survey indicates that while 48% of organizations are considering migrating workloads off their mainframes, the reality of returning is complex.

Only 24% of companies that completed a migration ever repatriated any workloads. A significant 60% reported zero movement back to the mainframe. This is not about organizational pride but rather the technical and financial barriers that make the return journey impractical.

- Irreversible Data Transformation: During migration, data is permanently altered. Legacy formats like EBCDIC are converted to ASCII, and packed decimal fields (COMP-3) are often changed to floating-point numbers. Reversing this process without corrupting transactional data accumulated post-migration is a high-risk undertaking.

- Architectural Entanglement: The application becomes deeply integrated with the cloud ecosystem, utilizing managed databases, serverless functions, and object storage. Decoupling these dependencies for an on-premises deployment would require a second, equally complex modernization project.

- Skills Atrophy and Decommissioning: Once the mainframe is decommissioned, specialized staff are typically reassigned or retire, leading to a loss of institutional knowledge. Re-establishing the required hardware and human expertise is a significant challenge.

This dynamic can create a “sunk cost fallacy,” where organizations continue to invest in a failing cloud strategy because the perceived cost and complexity of repatriation seem even greater.

The decision to migrate a core mainframe system is one of the highest-stakes choices a technical leader can make. The lack of a viable rollback option means the pre-migration analysis must be exhaustive, skeptical, and brutally honest about the potential mainframe to cloud migration challenges.

The Strategic Imperative for Due Diligence

The one-way nature of this transition elevates the importance of pre-migration due diligence from a planning exercise to a strategic imperative. Data-driven answers to difficult questions are required before commitment.

Key Questions for Your Pre-Migration Analysis:

- Performance Equivalence: Has a production-scale proof-of-concept demonstrated that the cloud architecture can match the sub-millisecond I/O and transactional throughput of the mainframe for your specific workload? Vendor assurances are insufficient.

- Data Fidelity: Is there a field-by-field plan for data conversion? Have parallel tests been run to guarantee 100% data fidelity for critical financial and customer data?

- True Cost of Ownership: Does the financial model account for the variability of cloud consumption? Is the budget sufficient to cover specialized migration talent and a contingency for refactoring complexities?
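The data fidelity question above can be made concrete with a field-by-field reconciliation check run during parallel testing. The following is a minimal sketch, assuming both systems can export records as dictionaries keyed by a shared identifier; the function name `reconcile`, the field names, and the sample values are hypothetical and chosen only for illustration.

```python
from decimal import Decimal


def reconcile(source_rows, target_rows, key):
    """Compare two record sets field by field.

    Returns a list of (record_key, field, source_value, target_value)
    tuples, one per discrepancy. An empty list means full agreement.
    """
    target_by_key = {row[key]: row for row in target_rows}
    source_keys = set()
    mismatches = []

    for src in source_rows:
        record_key = src[key]
        source_keys.add(record_key)
        tgt = target_by_key.get(record_key)
        if tgt is None:
            mismatches.append((record_key, "<row missing in target>", src, None))
            continue
        for field, src_value in src.items():
            tgt_value = tgt.get(field)
            if src_value != tgt_value:
                mismatches.append((record_key, field, src_value, tgt_value))

    # Rows that exist only in the target are also discrepancies.
    for record_key, tgt in target_by_key.items():
        if record_key not in source_keys:
            mismatches.append((record_key, "<row missing in source>", None, tgt))

    return mismatches


# Hypothetical sample: one balance differs by a single cent.
mainframe = [
    {"account": "A-1", "balance": Decimal("1024.10"), "status": "OPEN"},
    {"account": "A-2", "balance": Decimal("50.00"), "status": "OPEN"},
]
cloud = [
    {"account": "A-1", "balance": Decimal("1024.09"), "status": "OPEN"},
    {"account": "A-2", "balance": Decimal("50.00"), "status": "OPEN"},
]

for mismatch in reconcile(mainframe, cloud, key="account"):
    print(mismatch)
```

A real reconciliation run would stream records rather than hold them in memory, but the principle is the same: any non-empty result blocks sign-off.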

To navigate this process and avoid common pitfalls, adopt proven cloud migration best practices from the project’s inception. Failure to rigorously validate these points before starting increases the risk of project failure.

Deconstructing Technical Failure at the Code Level

While high-level strategic errors can set a project on the wrong path, the ultimate failure of a mainframe migration often occurs at the code level. This is where architectural diagrams meet the reality of a 40-year-old system.

The costliest migration failures are not conceptual; they are technical bugs that can silently corrupt financial data and degrade performance.

The Decimal Precision Time Bomb

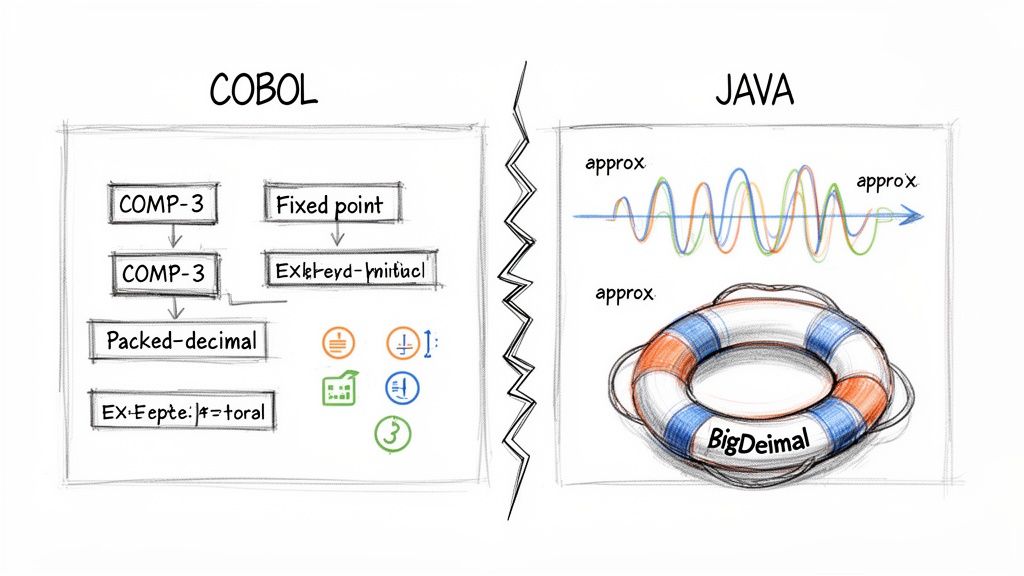

A common and significant error is the mishandling of numerical data types. An estimated 67% of COBOL migration failures can be traced back to incorrect decimal precision handling. This is not a simple rounding error but a systemic flaw that can compromise financial records.

Mainframes, particularly in banking and insurance, rely heavily on COBOL’s COMP-3 data type, also known as packed decimal. It uses fixed-point arithmetic, storing numbers with a precise, predetermined number of decimal places, which is essential for financial calculations.
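To illustrate what packed decimal storage looks like in practice, here is a minimal Python sketch of a COMP-3 decoder. It assumes the conventional layout (two 4-bit digits per byte, with the final low nibble holding the sign) and that the number of implied decimal places is known from the copybook. The function name `unpack_comp3` is hypothetical, and a production converter would also need to handle record layouts, redefines, and invalid data policies.

```python
from decimal import Decimal

# Sign nibbles: 0xC, 0xA, 0xE, 0xF are treated as positive; 0xD and 0xB as negative.
NEGATIVE_SIGNS = {0x0D, 0x0B}
VALID_SIGNS = {0x0A, 0x0B, 0x0C, 0x0D, 0x0E, 0x0F}


def unpack_comp3(raw: bytes, scale: int) -> Decimal:
    """Decode a packed decimal field into an exact Decimal.

    `scale` is the number of implied decimal places, e.g. 2 for
    PIC S9(5)V99 COMP-3.
    """
    if not raw:
        raise ValueError("empty packed decimal field")

    nibbles = []
    for byte in raw:
        nibbles.append(byte >> 4)      # high nibble
        nibbles.append(byte & 0x0F)    # low nibble

    sign_nibble = nibbles.pop()        # last nibble is the sign
    if sign_nibble not in VALID_SIGNS:
        raise ValueError(f"invalid sign nibble: {sign_nibble:#x}")
    if any(digit > 9 for digit in nibbles):
        raise ValueError("invalid digit nibble in packed decimal field")

    sign = 1 if sign_nibble in NEGATIVE_SIGNS else 0
    # Build the Decimal from (sign, digits, exponent): no float is involved.
    return Decimal((sign, tuple(nibbles), -scale))


# PIC S9(5)V99 COMP-3 occupies 4 bytes: 7 digits plus a sign nibble.
print(unpack_comp3(bytes([0x12, 0x34, 0x56, 0x7C]), scale=2))  # 12345.67
print(unpack_comp3(bytes([0x00, 0x10, 0x00, 0x0D]), scale=2))  # -100.00
```

The key design choice is that the value never passes through a floating-point type on its way from the raw bytes to the target representation.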

Modern languages like Java often default to floating-point numbers (float and double). These are suitable for scientific applications but problematic for financial math because they store numbers as approximations. This can introduce small errors that compound over millions of transactions.

An amount such as $0.10 cannot be stored exactly in a binary floating-point variable; adding 0.10 and 0.20, for example, yields 0.30000000000000004 rather than 0.30. While seemingly trivial, the cumulative effect of such fractional discrepancies across millions of transactions can lead to a multi-million-dollar reconciliation issue.

A specific approach is required to manage this risk.

- In Java: Use the `BigDecimal` class for all financial calculations. This class correctly mimics the fixed-point behavior of COBOL COMP-3.

- In Python: The `Decimal` type from the standard `decimal` module serves the same purpose, ensuring the integrity of monetary data.
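The difference is easy to demonstrate. The sketch below, in Python, sums one million ten-cent amounts twice: once with binary floating point and once with `Decimal`. The helper names `total_float` and `total_decimal` are illustrative only.

```python
from decimal import Decimal


def total_float(amount: float, count: int) -> float:
    """Accumulate `amount` `count` times using binary floating point."""
    total = 0.0
    for _ in range(count):
        total += amount
    return total


def total_decimal(amount: str, count: int) -> Decimal:
    """Accumulate `amount` `count` times using exact decimal arithmetic."""
    unit = Decimal(amount)  # constructed from a string, not from a float
    total = Decimal("0")
    for _ in range(count):
        total += unit
    return total


float_total = total_float(0.10, 1_000_000)
exact_total = total_decimal("0.10", 1_000_000)

print(float_total == 100_000.0)               # False: rounding error has accumulated
print(exact_total == Decimal("100000.00"))    # True: the decimal sum is exact
```

Note that the `Decimal` is built from the string `"0.10"`. Constructing it from the float `0.10` would carry the binary approximation into the decimal value and defeat the purpose.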

Any vendor or consultant suggesting the use of standard floating-point types for financial data may lack the necessary mainframe expertise.

The Latency Chasm Between Mainframe I/O and Cloud Storage

Another common failure point arises from a misunderstanding of I/O performance. Mainframe applications were architected with the expectation of microsecond-level data access, as storage is physically co-located with the processor.

Cloud platforms are, by design, distributed systems. When a mainframe application is moved to the cloud, a simple data read becomes a network call from a compute instance to a managed service like Amazon DynamoDB or Azure Cosmos DB. This introduces network latency.

The impact can be substantial.

- An online transaction that took 500 milliseconds on the mainframe can increase to 5 seconds in the cloud.

- A batch job processing a million records in one hour might now take 10 hours, potentially missing critical business processing windows.
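A back-of-the-envelope model shows how per-read latency scales into batch duration. The sketch below assumes a strictly sequential job with no caching, batching, or parallelism, and the figures (four reads per record, 900 microseconds versus 9 milliseconds per read) are hypothetical values chosen to illustrate the one-hour-to-ten-hour scenario, not measurements.

```python
MICROSECONDS_PER_HOUR = 3_600_000_000


def batch_hours(records: int, reads_per_record: int, read_latency_us: int) -> float:
    """Estimate wall-clock hours for a sequential batch job.

    Every read is assumed to block until it completes, so total time is
    simply records x reads per record x latency per read.
    """
    total_us = records * reads_per_record * read_latency_us
    return total_us / MICROSECONDS_PER_HOUR


# Hypothetical workload: one million records, four reads each.
on_mainframe = batch_hours(1_000_000, 4, read_latency_us=900)
in_cloud = batch_hours(1_000_000, 4, read_latency_us=9_000)

print(f"Co-located storage: {on_mainframe:.1f} h")  # 1.0 h
print(f"Networked storage:  {in_cloud:.1f} h")      # 10.0 h
```

Because the relationship is linear, adding compute capacity does not change the result; only reducing the number of round trips or the latency of each one does.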

This is an architectural problem, not a resource problem. Addressing it requires significant engineering. For instance, migrating high-speed VSAM data to a NoSQL database like DynamoDB necessitates complex sharding and partitioning strategies, as used by Itaú to maintain 99.99% uptime for 70 million clients. The complexity of modernizing legacy code often requires specialized tooling; AWS Transform is reported to have reduced timelines by 3x for Fiserv and BMW on projects involving millions of lines of code. Further insights can be found in the latest state of the mainframe reports.

Without rigorous performance modeling and load testing that reflects production volumes, these latency issues are often discovered too late in the project lifecycle.

The Unpredictable Economics of Cloud Migration

The business case for mainframe migration often centers on replacing high, fixed operational costs with lower, variable cloud expenses. This Total Cost of Ownership (TCO) calculation can be compelling but frequently fails to account for real-world complexities.

The economics of these projects are often unpredictable and can lead to significant budget overruns.

The cost of the migration itself is a major variable. Code refactoring for legacy COBOL applications is not a straightforward task. While a vendor may provide an initial estimate, the final cost is driven by complexity, not just the volume of code. Every unforeseen dependency, undocumented business rule, and data conversion challenge can cause the initial estimate to increase.

The Volatility of Refactoring Costs

The financial volatility of these projects is a significant risk. A successful migration can deliver a 362% ROI, but the path is not without financial obstacles. The cost of COBOL migration services typically falls between $1.50 and $4.00 per line of code.

However, with a project failure rate of 67%, often due to technical miscalculations, the initial budget is more of an estimate than a fixed cost. This is exacerbated by a skills shortage, which has contributed to a 122% increase (to 20%) in the outsourcing of mainframe management, directly impacting talent costs.

A one-million-line COBOL application might receive an initial refactoring quote of $2.5 million. If 10% of that code is found to be highly complex and undocumented, senior architects may need weeks for reverse-engineering. If the cost for that segment alone doubles, from $250,000 to $500,000, the total rises to about $2.75 million before testing begins, and larger complexity multipliers can push it past $3 million.
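A simple sensitivity calculation helps stress-test a per-line quote. The sketch below is illustrative only: the function name `refactoring_estimate` is hypothetical, the $2.50 rate is an assumed point within the per-line range cited above, and the complexity multiplier is an input to vary, not a known constant.

```python
from decimal import Decimal


def refactoring_estimate(lines, rate_per_line, complex_share, complex_multiplier):
    """Return (baseline, adjusted) refactoring cost.

    complex_share:      fraction of the code base that is unusually complex
    complex_multiplier: how many times more that portion costs per line
    """
    baseline = Decimal(lines) * rate_per_line
    simple_cost = baseline * (1 - complex_share)
    complex_cost = baseline * complex_share * complex_multiplier
    return baseline, simple_cost + complex_cost


baseline, adjusted = refactoring_estimate(
    lines=1_000_000,
    rate_per_line=Decimal("2.50"),     # assumed rate for illustration
    complex_share=Decimal("0.10"),
    complex_multiplier=Decimal("2"),
)
print(f"Baseline quote: ${baseline:,.2f}")   # $2,500,000.00
print(f"Adjusted total: ${adjusted:,.2f}")   # $2,750,000.00
```

With these inputs, doubling the cost of the complex 10% adds $250,000 to the baseline. Raising either the complex share or the multiplier moves the total quickly, which is why the initial quote should be treated as a range.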

This is why fixed-bid contracts are rare for such projects. The industry standard is Time & Materials (T&M), which transfers the financial risk to the client.

Post-Migration Bill Shock

Even if the migration project stays within budget, a significant financial surprise can occur post-launch. “Bill shock” is a common outcome, resulting from a fundamental mismatch in design philosophies.

Mainframe workloads are designed to run at peak capacity continuously. Cloud platforms are designed for elasticity and a pay-per-use model. Migrating a mainframe workload to the cloud without altering its behavior can lead to inefficient resource consumption.

Key drivers of unexpected costs include:

- Inefficient Architecture: A “lift and shift” migration results in a cloud application that operates like a mainframe application, consuming high levels of CPU and memory 24/7, leading to large monthly bills.

- Data Egress Fees: Mainframe applications frequently move large datasets between systems. In the cloud, transferring data out of a region or service incurs costs. These egress fees can add tens of thousands of dollars to monthly expenses.

- Overprovisioning: To mitigate performance risks, teams often select cloud instances that are larger and more expensive than necessary. Without aggressive performance tuning, this becomes a permanent operational cost.
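The cost drivers above can be combined into a rough monthly estimate. The following sketch uses placeholder prices (a $2.00 hourly instance rate and $0.09 per GB of egress) that are assumptions for illustration, not quotes from any provider; the function name `monthly_cost` is likewise hypothetical.

```python
from decimal import Decimal

HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def monthly_cost(instances, hourly_rate, hours_running, egress_gb, egress_rate_per_gb):
    """Estimate a monthly bill from compute hours and data egress."""
    compute = Decimal(instances) * hourly_rate * Decimal(hours_running)
    egress = Decimal(egress_gb) * egress_rate_per_gb
    return compute + egress


# Lift-and-shift behaviour: ten instances running around the clock.
always_on = monthly_cost(
    instances=10,
    hourly_rate=Decimal("2.00"),         # placeholder price
    hours_running=HOURS_PER_MONTH,
    egress_gb=200_000,
    egress_rate_per_gb=Decimal("0.09"),  # placeholder price
)

# Re-architected behaviour: the same fleet runs only 8 hours a night for 30 nights.
scheduled = monthly_cost(
    instances=10,
    hourly_rate=Decimal("2.00"),
    hours_running=8 * 30,
    egress_gb=200_000,
    egress_rate_per_gb=Decimal("0.09"),
)

print(f"Always-on: ${always_on:,.2f}")   # $32,600.00
print(f"Scheduled: ${scheduled:,.2f}")   # $22,800.00
```

In this hypothetical, egress accounts for $18,000 in both cases, which shows why scheduling compute alone does not resolve bill shock when data movement patterns are unchanged.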

Managing these new, variable expenses requires a new financial discipline. Implementing robust FinOps best practices from the outset is essential to avoid these issues.

The Inflated Cost of Scarce Talent

The human capital cost is another significant economic variable. The pool of engineers with expertise in both mainframe technologies (COBOL, CICS, JCL) and modern cloud architectures is small, and their services are expensive.

For most organizations, building an in-house team with this specific skill set is not feasible. This scarcity has created a seller’s market for specialized consultancies. The high daily rates for mainframe migration experts can inflate project budgets and extend timelines.

Underestimating this human capital cost is a common reason for budget overruns. For more on managing these expenses, refer to our guide on cloud cost optimization strategies.

A Framework for Vetting Migration Partners

Selecting the right implementation partner is one of the most critical decisions in a mainframe-to-cloud migration.

The market includes many vendors claiming relevant expertise, but a generic “cloud migration” background is often insufficient for the specific challenges of mainframe modernization. Choosing an inappropriate partner can lead to inflated costs and project failure.

Engineering leaders require a data-backed method to evaluate potential partners. This involves going beyond marketing materials to ask specific technical questions that reveal a vendor’s true experience, including their experience with project failures. The objective is to find a partner whose expertise aligns with the specific technical characteristics of your systems.

Beyond the Sales Pitch

The vetting process should be a technical deep-dive. A credible partner will welcome this level of scrutiny.

A vendor that becomes defensive, provides vague answers, or claims a 100% success rate is a red flag. Transparency about past failures is an indicator of maturity and experience.

Focus your questions on four critical areas to assess a partner’s capabilities.

Technical Specialization and Data Fidelity

Not all mainframe workloads are identical. A partner with experience in retail migrations may lack the specific knowledge of data types, such as COMP-3 packed decimals, required for financial services. The initial line of questioning must validate their niche expertise.

- Critical Question: “Show me three anonymized case studies of migrating COBOL applications with COMP-3 data fields for a financial services client. I want to see the testing reports that prove 100% data reconciliation.”

- Red Flag: The vendor presents generic “cloud migration” case studies or cannot produce detailed, data-type-specific evidence. They discuss “moving data” but are evasive when asked about preserving its mathematical integrity.

This level of detail is critical. More questions can be found in our comprehensive technical due diligence checklist.

Performance Engineering and Latency Mitigation

A common failure is a partner’s inability to re-architect for performance. Mainframe applications are built for sub-millisecond I/O. A partner who only discusses cloud scalability while downplaying the impact of network latency on tightly coupled applications is a significant risk.

The most dangerous vendors are those who treat performance as a simple matter of choosing a larger cloud instance. True experts will discuss sharding strategies for VSAM-to-DynamoDB migrations, performance modeling for CICS transactions, and methodologies for ensuring batch windows don’t get blown.

They should be able to articulate how they will address the performance degradation that is one of the most common mainframe to cloud migration challenges.

Failure Transparency and Methodology Evolution

Every experienced vendor has encountered project challenges. Their willingness to discuss these failures is an indicator of their integrity. A partner who has never failed has likely never undertaken a truly complex project.

Ask them directly: “Describe a past migration project that was rolled back or failed to meet its objectives. What were the specific root causes, and how has your methodology evolved to prevent that from happening again?” A confident partner will provide a clear, non-defensive answer that demonstrates organizational learning.

Cost Model Transparency

Scrutinize their cost model. A simple per-line-of-code (LOC) estimate is a marketing tool, not a serious financial projection. It ignores the real cost drivers: code complexity, data migration effort, and testing.

A credible partner will provide a detailed cost breakdown, distinguishing between fixed-fee and time-and-materials components. They should be able to identify the top three drivers of budget overruns they’ve seen in past projects. If their proposal is vague and lacks a clear risk-adjusted contingency, they are shifting all financial uncertainty to you.

Vendor Vetting Checklist: Key Evaluation Criteria

Use this checklist to systematically evaluate and compare mainframe migration implementation partners based on technical specialization and past performance.

| Evaluation Category | Critical Question for Vendor | Red Flag |
|---|---|---|
| Niche Technical Proof | Show me proof of migrating workloads with my specific technologies (e.g., COBOL with COMP-3, CICS transactions, VSAM). | They show generic “cloud migration” case studies or can’t provide data-specific reconciliation reports. |
| Performance Strategy | How will you model and mitigate network latency for our tightly coupled, sub-millisecond response time applications? | Their only answer is “choose a bigger cloud instance.” They don’t mention re-architecting, sharding, or performance modeling. |
| Failure Transparency | Describe a past project that failed or was rolled back. What did you learn and how did you change your process? | They claim a 100% success rate or get defensive. A lack of failure stories often means a lack of complex experience. |
| Cost Model Detail | Provide a detailed cost breakdown. What are the top 3 drivers of budget overruns you’ve seen on similar projects? | They offer a flat per-line-of-code (LOC) price or a vague proposal that shifts all risk onto your budget. |

A rigorous vetting process is your best defense against project failure. A partner who can confidently and transparently answer these questions is likely to have the necessary experience.

When to Modernize on the Mainframe Instead

The “cloud-first” approach is not universally applicable. Migrating every legacy system to the cloud can be a technically flawed and financially irresponsible decision.

Understanding the significant mainframe to cloud migration challenges includes knowing when to reject a full migration. For some core business functions, the centralized processing power of the mainframe remains superior. Forcing these workloads into a distributed cloud environment can create more problems than it solves, particularly around performance and cost. The objective should be business value, not cloud adoption for its own sake.

Scenarios Ill-Suited for Cloud Migration

Certain workloads are a poor fit for the cloud. If your systems exhibit these characteristics, a full migration is a high-risk endeavor. In these cases, modernizing on the existing platform is often the more prudent strategy. This is not about resisting innovation but about making a calculated decision to avoid predictable failure.

Red flags that suggest staying on the mainframe include:

- High-Volume Synchronous Batch Processing: If your business relies on processing millions of transactions within a tight overnight window, the mainframe’s I/O capabilities are difficult to replicate in the cloud. The inherent latency of cloud storage and networking can lead to missed SLAs and budget overruns.

- Extreme Low-Latency Requirements: For applications requiring sub-millisecond response times, such as high-frequency trading or real-time payment authorizations, the physics of network round-trips in a distributed cloud make it nearly impossible to match the performance of a co-located mainframe application.

- Deeply Entangled Monoliths: For systems with hundreds of dependencies, the risk and cost of disentanglement can be prohibitive. Such a project can become a multi-year, multi-million-dollar effort with no clear ROI, negating any potential benefits of the cloud.

The Rise of the Hybrid Mainframe Model

Choosing not to pursue a full cloud migration does not mean maintaining the status quo. The modern mainframe is not an isolated system; it can be a component of a hybrid IT architecture.

A hybrid approach allows you to leverage the strengths of both platforms. Core processing remains on the mainframe, while its data and functions are exposed through modern APIs.

Modernizing on the mainframe allows you to retain your most reliable processing asset while selectively adopting cloud-native practices. This involves an API-first strategy and even containerization, not a wholesale migration. This approach mitigates the risk of a “big bang” project failure.

Organizations are already implementing this strategy by running containerized applications on LinuxONE, deploying APIs to connect mobile apps with core banking systems, and running AI/ML models directly on the mainframe to analyze transaction data in real-time.

This incremental, lower-risk path aligns technology strategy with business reality and the unique strengths of the mainframe platform.

Frequently Asked Questions About Mainframe Migration

What Is the Single Biggest Technical Mistake in a Mainframe Migration?

The most critical technical mistake is incorrect data conversion, specifically the loss of precision when converting mainframe packed decimal formats like COBOL’s COMP-3 into standard floating-point numbers in languages like Java or Python.

This error is not immediately obvious; it manifests as a silent corruption that introduces small rounding errors into financial calculations. It is often not detected until user acceptance testing, by which point significant rework is required, potentially jeopardizing the entire project.

Using appropriate data types, such as Java’s BigDecimal class, from the outset is non-negotiable for maintaining data fidelity.

How Can I Accurately Forecast the Cost of a Mainframe Migration?

Accurate cost forecasting is challenging, which contributes to budget overruns. A baseline can be established using a per-line-of-code (LOC) estimate, which for COBOL is typically between $1.50 and $4.00 per line.

However, this figure must be adjusted for code complexity, the number of integrations, and data volume.

The most reliable way to forecast costs is to conduct a small-scale, paid proof-of-concept (POC) with a potential vendor. Migrating a representative slice of an application provides real-world data on costs and effort, which can be used to build a more accurate financial model for the full project.

Is a Lift and Shift Migration a Viable Strategy for Mainframes?

Almost never. The “lift and shift” (rehosting) approach, which involves running the mainframe environment on an emulator in a cloud VM, rarely delivers the desired outcomes.

This strategy carries over all existing technical debt and the monolithic architecture. The primary change is the shift to a variable, and often unpredictable, cloud billing model.

Real value is typically derived from refactoring or re-platforming the application to modernize the code and architecture, enabling the use of cloud-native services. Rehosting is often a costly detour that fails to address the core problems.

Modernization Intel gives technical leaders the unvarnished truth about implementation partners so you can make defensible vendor decisions. Explore our research and get your vendor shortlist at https://softwaremodernizationservices.com.
